I. Build the Jenkins image

cd /data/software/BUSINESS/DockerFile/base/jenkins

vim hosts # hosts entries the container needs

    192.168.0.1 git.xxxx.com

vim entrypoint.sh # script executed when the container starts

    #!/bin/bash
    set -x
    echo 'add route to gitlab......'
    cat /tmp/hosts >> /etc/hosts
    /sbin/tini /usr/local/bin/jenkins.sh

vim Dockerfile # edit the Dockerfile

    FROM jenkins/jenkins:2.302
    MAINTAINER jiangweihua
    USER root
    ADD hosts /tmp
    ADD entrypoint.sh /entrypoint.sh
    RUN chmod a+x /entrypoint.sh
    ENTRYPOINT ["/entrypoint.sh"]

docker build --no-cache=true -t 172.24.161.36:80/base/jenkins:v2.302 . # build the Jenkins image

docker push 172.24.161.36:80/base/jenkins:v2.302 # push the image to the registry
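The entrypoint appends /tmp/hosts to /etc/hosts every time it runs, so a second run against the same file would duplicate the entries. A minimal sketch of an idempotent append follows; the function name `append_hosts_once` is hypothetical and not part of the original script.

```shell
#!/bin/bash
# Append each non-empty line of SRC to DST only when DST does not already
# contain that exact line. Hypothetical helper, not from the original notes.
append_hosts_once() {
  local src="$1" dst="$2" line
  while IFS= read -r line || [ -n "$line" ]; do
    [ -z "$line" ] && continue
    # -x: match the whole line, -F: treat the line as a fixed string
    grep -qxF -- "$line" "$dst" 2>/dev/null || printf '%s\n' "$line" >> "$dst"
  done < "$src"
}
```

In entrypoint.sh this could replace the `cat /tmp/hosts >> /etc/hosts` line with `append_hosts_once /tmp/hosts /etc/hosts`.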

II. Start the Jenkins Deployment

cd /data/software/BUSINESS/jenkins

vim rbac.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: jenkins
      namespace: kube-ops
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: jenkins
    rules:
      - apiGroups: ["extensions", "apps"]
        resources: ["deployments"]
        verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
      - apiGroups: [""]
        resources: ["services"]
        verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
      - apiGroups: [""]
        resources: ["pods"]
        verbs: ["create", "delete", "get", "list", "patch", "update", "watch"]
      - apiGroups: [""]
        resources: ["pods/exec"]
        verbs: ["create", "delete", "get", "list", "patch", "update", "watch"]
      - apiGroups: [""]
        resources: ["pods/log"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["secrets"]
        verbs: ["get"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: jenkins
      namespace: kube-ops
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: jenkins
    subjects:
      - kind: ServiceAccount
        name: jenkins
        namespace: kube-ops

vim nginx.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-test-pod
      namespace: kube-ops
      labels:
        name: nginx-test-pod
    spec:
      containers:
        - name: nginx-test-pod
          image: nginx
          ports:
            - name: web
              containerPort: 80
          volumeMounts:
            - name: cephfs
              mountPath: /usr/share/nginx/html
      volumes:
        - name: cephfs
          persistentVolumeClaim:
            claimName: jenkins-pvc

vim jenkins.yaml

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: jenkins
      namespace: kube-ops
    spec:
      selector:
        matchLabels:
          app: jenkins
      template:
        metadata:
          labels:
            app: jenkins
        spec:
          terminationGracePeriodSeconds: 10
          serviceAccount: jenkins
          containers:
            - name: jenkins
              #image: jenkins/jenkins:2.302
              image: 172.24.161.36:80/base/jenkins:v2.302
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 8080
                  name: web
                  protocol: TCP
                - containerPort: 50000
                  name: agent
                  protocol: TCP
              resources:
                limits:
                  cpu: 1000m
                  memory: 1Gi
                requests:
                  cpu: 500m
                  memory: 512Mi
              livenessProbe:
                httpGet:
                  path: /login
                  port: 8080
                initialDelaySeconds: 60
                timeoutSeconds: 5
                failureThreshold: 12
              readinessProbe:
                httpGet:
                  path: /login
                  port: 8080
                initialDelaySeconds: 60
                timeoutSeconds: 5
                failureThreshold: 12
              volumeMounts:
                - name: jenkinshome
                  subPath: jenkins
                  mountPath: /var/jenkins_home
          securityContext:
            fsGroup: 1000
          volumes:
            - name: jenkinshome
              persistentVolumeClaim:
                claimName: jenkins-pvc
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: jenkins
      namespace: kube-ops
      labels:
        app: jenkins
    spec:
      selector:
        app: jenkins
      type: NodePort
      ports:
        - name: web
          port: 8080
          targetPort: web
          #nodePort: 30002
        - name: agent
          port: 50000
          targetPort: agent

kubectl create -f /data/software/BUSINESS/jenkins/ # start Jenkins and the nginx pod
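After the manifests are created, it can help to wait for the rollout and look up the NodePort that was assigned, since `nodePort` is commented out in the Service. A minimal sketch follows; the function names and the 300s timeout are assumptions, while the namespace, Deployment, Service, and port names come from the manifests above.

```shell
#!/bin/bash
# Wait for a Deployment rollout to finish. Defaults match jenkins.yaml;
# the 300s timeout is an arbitrary choice.
wait_rollout() {
  local ns="${1:-kube-ops}" name="${2:-jenkins}" timeout="${3:-300s}"
  kubectl -n "$ns" rollout status "deployment/$name" --timeout="$timeout"
}

# Print the NodePort Kubernetes assigned to the Service port named "web".
jenkins_node_port() {
  local ns="${1:-kube-ops}" name="${2:-jenkins}"
  kubectl -n "$ns" get svc "$name" \
    -o jsonpath='{.spec.ports[?(@.name=="web")].nodePort}'
}
```

Running `wait_rollout && jenkins_node_port` prints the port to use in `http://<node-ip>:<port>/login`.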

System environment:

Reference: https://github.com/easzlab/kubeasz

Operating system: CentOS 7

10.25.41.114 master|node (kubeasz deployment node)

10.25.85.88 node

10.25.106.10 node

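I. Kubernetes cluster system initialization

1. On the deployment node 10.25.41.114, generate an SSH key and distribute it to every server in the cluster so that passwordless login works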
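The notes do not show the commands for this step. A minimal sketch follows, assuming root logins, an RSA key at the default path, and that `ssh-copy-id` is installed; the function name and the `KEY_FILE` / `SSH_COPY_ID` override variables are hypothetical.

```shell
#!/bin/bash
# Create a key pair if none exists, then copy the public key to every host
# passed as an argument. KEY_FILE and SSH_COPY_ID are hypothetical overrides.
distribute_key() {
  local key="${KEY_FILE:-$HOME/.ssh/id_rsa}"
  local copy="${SSH_COPY_ID:-ssh-copy-id}"
  local host
  [ -f "$key" ] || ssh-keygen -t rsa -b 4096 -N '' -f "$key"
  for host in "$@"; do
    # Prompts once for each host's root password
    "$copy" -i "$key.pub" "root@$host" || return 1
  done
}
```

Call it with the cluster addresses listed in the system environment section, for example `distribute_key 10.25.41.114 10.25.85.88`.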

2. Install Ansible

yum update;yum install python -y;wget https://bootstrap.pypa.io/pip/2.7/get-pip.py;chmod a+x get-pip.py;python get-pip.py;python -m pip install --upgrade "pip < 21.0";pip install ansible -i https://mirrors.aliyun.com/pypi/simple/

3. On every machine in the cluster, disable SELinux, set ulimit, and tune the kernel parameters; reboot the servers after the changes

setenforce 0

vim /etc/security/limits.conf

    * soft nofile 655350
    * hard nofile 655360
    * soft nproc 655350
    * hard nproc 655350

vim /etc/security/limits.d/20-nproc.conf

    * soft nproc 655350
    root soft nproc unlimited

vim /etc/sysctl.conf

    net.ipv4.ip_forward = 1
    net.ipv4.conf.default.rp_filter = 1
    net.ipv4.conf.default.accept_source_route = 0
    kernel.sysrq = 0
    kernel.core_uses_pid = 1
    net.ipv4.tcp_syncookies = 1
    kernel.msgmnb = 65536
    kernel.msgmax = 65536
    kernel.shmmax = 68719476736
    kernel.shmall = 4294967296
    net.ipv4.tcp_max_syn_backlog = 131072
    net.core.netdev_max_backlog = 131072
    net.ipv4.tcp_max_tw_buckets = 6000
    net.ipv4.ip_local_port_range = 1024 65535
    net.ipv4.tcp_tw_recycle = 1
    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_syncookies = 1
    net.core.somaxconn = 32768
    net.ipv4.tcp_timestamps = 0
    net.ipv4.tcp_synack_retries = 2
    net.ipv4.tcp_syn_retries = 2
    net.ipv4.tcp_fin_timeout = 10
    net.ipv4.tcp_mem = 94500000 915000000 927000000
    net.ipv4.tcp_max_orphans = 3276800
    net.ipv4.tcp_rmem = 4096 87380 4194304
    net.ipv4.tcp_wmem = 4096 16384 4194304
    net.core.wmem_default = 8388608
    net.core.rmem_default = 8388608
    net.core.rmem_max = 16777216
    net.core.wmem_max = 16777216
    vm.nr_hugepages=512
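A long sysctl.conf easily picks up repeated keys, and because the file is applied top to bottom the last assignment wins. A minimal sketch for spotting repeats before rebooting follows; the function name is hypothetical.

```shell
#!/bin/bash
# Print every sysctl key that is assigned more than once in the given file.
# Comment lines (# or ;) and lines without "=" are ignored.
find_duplicate_sysctl_keys() {
  awk -F= '
    /^[[:space:]]*[#;]/ || !/=/ { next }
    {
      key = $1
      gsub(/[[:space:]]/, "", key)
      # Print a key once, at its second occurrence
      if (seen[key]++ == 1) print key
    }
  ' "$1"
}
```

For example, `find_duplicate_sysctl_keys /etc/sysctl.conf` prints one key per line and prints nothing when every key is unique.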

II. Orchestrate the Kubernetes installation on the deployment node

1. Download the project source, binaries, and offline images

cd /usr/local/src

export release=3.0.0

wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

chmod +x ./ezdown

./ezdown -D # once the script succeeds, everything (kubeasz code, binaries, offline images) is laid out under /etc/kubeasz

cd /etc/kubeasz

./ezctl new k8s-01 # generate the cluster configuration files

2. Edit the configuration files to set what gets installed and the network mode

vim /etc/kubeasz/clusters/k8s-01/config.yaml

INSTALL_SOURCE: "online"

OS_HARDEN: false

ntp_servers:

- "ntp1.aliyun.com"

- "time1.cloud.tencent.com"

- "0.cn.pool.ntp.org"

local_network: "0.0.0.0/0"

CA_EXPIRY: "876000h"

CERT_EXPIRY: "438000h"

CLUSTER_NAME: "cluster1"

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

ENABLE_MIRROR_REGISTRY: true

SANDBOX_IMAGE: "easzlab/pause-amd64:3.2"

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

DOCKER_STORAGE_DIR: "/var/lib/docker"

ENABLE_REMOTE_API: false

INSECURE_REG: '["127.0.0.1/8"]'

MASTER_CERT_HOSTS:

- "10.25.41.114"

#- "www.test.com"

NODE_CIDR_LEN: 24

KUBELET_ROOT_DIR: "/var/lib/kubelet"

MAX_PODS: 110

KUBE_RESERVED_ENABLED: "yes"

SYS_RESERVED_ENABLED: "no"

BALANCE_ALG: "roundrobin"

FLANNEL_BACKEND: "vxlan"

DIRECT_ROUTING: false

flannelVer: "v0.13.0-amd64"

flanneld_image: "easzlab/flannel:{{ flannelVer }}"

flannel_offline: "flannel_{{ flannelVer }}.tar"

CALICO_IPV4POOL_IPIP: "Always"

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

CALICO_NETWORKING_BACKEND: "brid"

calico_ver: "v3.15.3"

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

calico_offline: "calico_{{ calico_ver }}.tar"

ETCD_CLUSTER_SIZE: 3

cilium_ver: "v1.4.1"

cilium_offline: "cilium_{{ cilium_ver }}.tar"

OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

kube_ovn_ver: "v1.5.3"

kube_ovn_offline: "kube_ovn_{{ kube_ovn_ver }}.tar"

OVERLAY_TYPE: "full"

FIREWALL_ENABLE: "true"

kube_router_ver: "v0.3.1"

busybox_ver: "1.28.4"

kuberouter_offline: "kube-router_{{ kube_router_ver }}.tar"

busybox_offline: "busybox_{{ busybox_ver }}.tar"

dns_install: "yes"

corednsVer: "1.7.1"

ENABLE_LOCAL_DNS_CACHE: true

dnsNodeCacheVer: "1.16.0"

LOCAL_DNS_CACHE: "169.254.20.10"

metricsserver_install: "yes"

metricsVer: "v0.3.6"

dashboard_install: "yes"

dashboardVer: "v2.1.0"

dashboardMetricsScraperVer: "v1.0.6"

ingress_install: "yes"

ingress_backend: "traefik"

traefik_chart_ver: "9.12.3"

prom_install: "no"

prom_namespace: "monitor"

prom_chart_ver: "12.10.6"

HARBOR_VER: "v1.9.4"

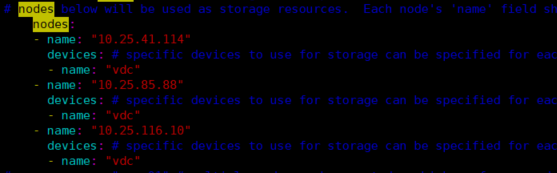

vim /etc/kubeasz/clusters/k8s-01/hosts

[etcd]

10.25.41.114

10.25.85.88

10.25.116.10

[kube_master]

10.25.41.114

[kube_node]

10.25.85.88

10.25.116.10

[harbor]

[ex_lb]

[chrony]

10.25.41.114

10.25.85.88

10.25.116.10

[all:vars]

CONTAINER_RUNTIME="docker"

CLUSTER_NETWORK="calico" # use calico for the cluster network

PROXY_MODE="ipvs"

SERVICE_CIDR="10.68.0.0/16"

CLUSTER_CIDR="172.20.0.0/16"

NODE_PORT_RANGE="0-65535" # port range available to NodePort services

CLUSTER_DNS_DOMAIN="cluster.local."

bin_dir="/opt/kube/bin"

base_dir="/etc/kubeasz"

cluster_dir="{{ base_dir }}/clusters/k8s-01"

ca_dir="/etc/kubernetes/ssl"
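Before running the installer it can be worth confirming which addresses ended up in each inventory group. A minimal sketch that lists the hosts of one group from an INI-style inventory such as the hosts file above follows; the function name is hypothetical.

```shell
#!/bin/bash
# Print the first field of every non-empty, non-comment line that follows
# the "[group]" header, stopping at the next "[...]" header.
inventory_group_hosts() {
  awk -v g="[$1]" '
    /^\[/ { in_group = ($0 == g); next }
    in_group && NF && $1 !~ /^#/ { print $1 }
  ' "$2"
}
```

For example, `inventory_group_hosts kube_node /etc/kubeasz/clusters/k8s-01/hosts` lists the worker nodes, which can then be compared with the addresses in the system environment section.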

3. Install the Kubernetes cluster in one step

./ezctl setup k8s-01 all

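4. With the Calico network mode, the MTU of the tunl interface must leave 30 bytes of headroom, that is, tunl MTU + 30 <= physical NIC MTU. If the value is wrong, run the following on the master node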

kubectl patch configmap/calico-config -n kube-system --type merge -p '{"data":{"veth_mtu": "1430"}}'
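The 1430 in the patch above is consistent with a physical NIC MTU of 1460 minus the 30 bytes of headroom from step 4; the 1460 figure is an assumption, since these notes do not state the NIC MTU. A minimal sketch for deriving the value on a given node follows; both function names are hypothetical.

```shell
#!/bin/bash
# Read the MTU of a network interface from sysfs (Linux only).
nic_mtu() {
  cat "/sys/class/net/$1/mtu"
}

# Subtract the headroom (30 by default, per step 4) from the NIC MTU.
calico_veth_mtu() {
  local mtu="$1" headroom="${2:-30}"
  echo $(( mtu - headroom ))
}
```

For example, `calico_veth_mtu "$(nic_mtu eth0)"` prints the value to put in `veth_mtu`, where `eth0` stands in for the node's actual interface name.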

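kubectl rollout restart daemonset calico-node -n kube-system

6. Copy the certificates generated for the Calico network to every node, so that dashboard-metrics-scraper does not fail to start when it lands on a node without the certificate files

scp /etc/calico.tar.gz root@<node>:/etc/ # replace <node> with each node's address

5. Generate a client certificate for the dashboard page (resolves the dashboard 401 error)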

cd /etc/kubeasz/clusters/k8s-01/ssl;

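openssl pkcs12 -export -out admin.pfx -inkey /etc/kubeasz/clusters/k8s-01/ssl/admin-key.pem -in /etc/kubeasz/clusters/k8s-01/ssl/admin.pem -certfile /etc/kubeasz/clusters/k8s-01/ssl/ca.pem # setting an export password is optional

Or, with relative paths from the ssl directory: openssl pkcs12 -export -out admin.pfx -inkey admin-key.pem -in admin.pem -certfile ca.pem

Download the generated certificate and double-click it to install it on your local computer

# Get the Kubernetes Dashboard token

kubectl describe secret -n kube-system $(kubectl get secret -n kube-system|grep admin-user|awk '{print $1}')

5. Check the cluster status: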

kubectl get node

kubectl top node

kubectl top pod -A

6. To allow or forbid scheduling pods on the master:

kubectl uncordon 10.25.41.114 # allow scheduling on the master node

kubectl cordon 10.25.41.114 # forbid scheduling

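7. Set node taints:

kubectl taint node [node] key=value:[effect]

[effect] takes one of: [ NoSchedule | PreferNoSchedule | NoExecute ]

NoSchedule: pods will never be scheduled onto the node

PreferNoSchedule: the scheduler tries to avoid the node

NoExecute: pods are not scheduled onto the node, and pods already running on it are evicted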
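The taint argument has the form `key=value:effect`, and a typo in the effect is easy to miss. A minimal sketch that validates the effect before building the argument follows; the function name and the `dedicated=ci` key and value in the usage line are hypothetical.

```shell
#!/bin/bash
# Build a taint argument in the form key=value:effect.
# Returns 1 when the effect is not one of the three valid values.
taint_spec() {
  local key="$1" value="$2" effect="$3"
  case "$effect" in
    NoSchedule|PreferNoSchedule|NoExecute)
      printf '%s=%s:%s\n' "$key" "$value" "$effect"
      ;;
    *)
      echo "invalid effect: $effect" >&2
      return 1
      ;;
  esac
}
```

For example, `kubectl taint node 10.25.85.88 "$(taint_spec dedicated ci NoSchedule)"` applies the taint, and appending `-` to the same argument removes it again.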

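Ceph is a free, open-source component written in C++. It was designed as a unified distributed storage system that offers good performance, reliability, and scalability.

Advantages:

1. High scalability: runs on commodity x86 servers, supports 10 to 1000 servers, and scales from TB to EB

2. High reliability: no single point of failure, multiple data replicas, automatic management and self-healing

3. High performance: data is distributed evenly and resources are used efficiently

4. Multiple storage types: object storage, block device storage, and file system storage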

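Architecture:

1. Base storage system: RADOS, a distributed object store that holds the user data and management components of the Ceph system; every operation and all stored content is ultimately handled by this layer

2. Base library librados: abstracts and wraps RADOS and exposes an API to the layers above, which can be used to develop applications directly against RADOS

3. Application interface layer: builds higher-level abstractions on top of librados as interfaces for applications and clients

   1. radosgw: object storage gateway interface

   2. rbd: block storage interface

   3. cephfs: file system storage interface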

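Basic components:

1. osd: stores all data and objects in the cluster and handles replication, recovery, backfill, and rebalancing; OSD daemons send heartbeats to one another and report status information to the monitors

2. mds (optional): only needed when file system storage is used. The MDS acts like a caching proxy for metadata; using CephFS requires MDS nodes

3. monitor: monitors the state of the whole cluster, maintains the cluster map, and keeps cluster data consistent

4. manager (ceph-mgr): collects cluster state and runtime metrics such as storage utilization, current performance, and load; exposes the Ceph dashboard UI and a RESTful API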

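References:

Rook Ceph documentation: https://rook.github.io/docs/rook/v1.4/ceph-quickstart.html

CephFS dynamic PVC provisioning with multi-node mounts: https://github.com/kubernetes-retired/external-storage/tree/master/ceph/cephfs

Install and deploy CephFS

1. Attach a raw disk and confirm the device is wiped; it must not contain any file system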

    #!/bin/bash
    dd if=/dev/zero of=/dev/vdc bs=1M count=100 oflag=direct,dsync
    ls /dev/mapper/ceph-* | xargs -I% -- dmsetup remove %
    rm -rf /dev/ceph-*
    rm -rf /var/lib/rook/

lsblk -f # check the state of the raw device
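The `lsblk -f` check can be scripted so a disk that still carries a file system is caught before Rook tries to use it. A minimal sketch follows; the function name is hypothetical, and `/dev/vdc` is the device used in the wipe script.

```shell
#!/bin/bash
# Succeed (exit 0) when lsblk reports no file system type for the device
# or any of its partitions; exit 1 when one is found, 2 when lsblk fails.
is_raw_device() {
  local fstype
  fstype=$(lsblk -no FSTYPE "$1") || return 2
  [ -z "$(printf '%s' "$fstype" | tr -d '[:space:]')" ]
}
```

For example, `is_raw_device /dev/vdc && echo "ready for ceph"`.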

2. Clone the Rook project (this document uses release 1.4)

git clone https://github.com/rook/rook.git

cd rook

git checkout -b release-1.4 remotes/origin/release-1.4

git branch -a

3. Install the Ceph cluster

cd rook/cluster/examples/kubernetes/ceph

kubectl create -f common.yaml

kubectl create -f operator.yaml

kubectl create -f cluster.yaml # only the raw disk settings need to be changed

kubectl create -f toolbox.yaml # enter the toolbox and check the Ceph status; HEALTH_OK means the cluster was deployed successfully

ceph status

ceph osd status

4. Deploy CephFS by starting the MDS; once it is up, enter the toolbox and check the CephFS MDS status

cd rook/cluster/examples/kubernetes/ceph

vim filesystem.yaml # set the CephFilesystem name to match the SC and PVC configuration used later

    apiVersion: ceph.rook.io/v1
    kind: CephFilesystem
    metadata:
      name: cephfs
      namespace: rook-ceph

kubectl create -f filesystem.yaml

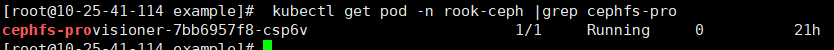

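5. Install the provisioner plugin (without it, multiple containers cannot write at the same time)

wget https://github.com/kubernetes-retired/external-storage/archive/refs/heads/master.zip

unzip master.zip

The plugin needs the Ceph auth keyring stored in a Kubernetes Secret. Enter the toolbox and run the following command to get the key:

ceph auth get-key client.admin

Copy the key into the local file ./secret, then create the Secret:

kubectl create secret generic ceph-secret --from-file=./secret --namespace=rook-ceph

kubectl get secret -n rook-ceph # check that the secret was created

cd external-storage-master/ceph/cephfs

Change the namespace in every *.yaml under deploy/rbac to rook-ceph. Note that PROVISIONER_SECRET_NAMESPACE in deployment.yaml must be changed as well.

kubectl create -f deploy/rbac/ # create the provisioner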

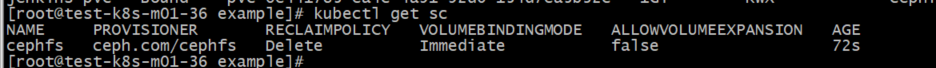

6. Create the StorageClass (SC)

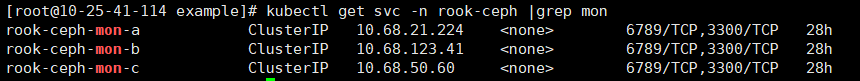

Look up the monitor addresses in the Ceph cluster

[root@10-25-41-114 example]# kubectl get svc -n rook-ceph |grep mon

cd external-storage-master/ceph/cephfs/example

vim class.yaml # set the monitor addresses and ports to those of the existing Ceph cluster, and make the SC name and namespace match the CephFilesystem

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: cephfs
    provisioner: ceph.com/cephfs # must match the name the running cephfs provisioner was started with
    parameters:
      monitors: 10.68.132.102:6789,10.68.146.234:6789,10.68.219.9:6789
      adminId: admin
      adminSecretName: ceph-secret
      adminSecretNamespace: "rook-ceph"
      claimRoot: /pvc-volumes

kubectl create -f class.yaml # create the SC

kubectl get sc
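The `monitors` value is a comma-separated list of `ip:port` pairs built from the monitor Service addresses. A minimal sketch that assembles it follows; the function name and the `MON_PORT` override are hypothetical, and 6789 is the port used in class.yaml.

```shell
#!/bin/bash
# Join the given monitor IPs into "ip:port,ip:port,...".
# MON_PORT is a hypothetical override; it defaults to 6789.
monitors_param() {
  local port="${MON_PORT:-6789}" out="" ip
  for ip in "$@"; do
    # Prefix a comma only when out already holds an entry
    out="${out:+$out,}$ip:$port"
  done
  printf '%s\n' "$out"
}
```

For example, `monitors_param 10.68.132.102 10.68.146.234 10.68.219.9` prints the value used above. The IPs can be copied from the `kubectl get svc -n rook-ceph |grep mon` output.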

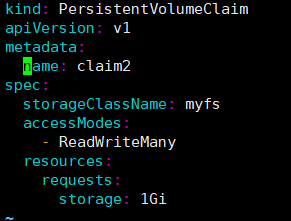

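7. PersistentVolumeClaim (PVC)

vim /etc/systemd/system/kube-apiserver.service # enable selfLink

    --feature-gates=RemoveSelfLink=false

systemctl daemon-reload

systemctl restart kube-apiserver # restart kube-apiserver after the change

vim claim.yaml # set the StorageClass name to the one created above (cephfs)

kubectl create -f claim.yaml

kubectl get pvc # a status of Bound means the PVC is healthy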
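The contents of claim.yaml are not shown in these notes. A minimal sketch that prints a claim follows, assuming it is the `jenkins-pvc` claim in `kube-ops` that the Jenkins manifests reference, that it uses the `cephfs` StorageClass, and that it is shared across pods (ReadWriteMany); the 10Gi size and the function name are arbitrary.

```shell
#!/bin/bash
# Print a PersistentVolumeClaim manifest. All defaults are assumptions:
# name, namespace, StorageClass, and size may differ in the real claim.yaml.
write_claim() {
  local name="${1:-jenkins-pvc}" ns="${2:-kube-ops}"
  local sc="${3:-cephfs}" size="${4:-10Gi}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $name
  namespace: $ns
spec:
  storageClassName: $sc
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: $size
EOF
}
```

For example, `write_claim > claim.yaml` writes the file, and `kubectl get pvc -n kube-ops` then shows the claim once it is created.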

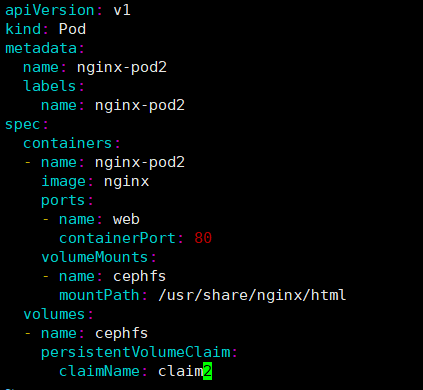

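8. Deploy a test container that mounts the PVC, then stop and start it to confirm the data persists

vim nginx-test.yaml

kubectl create -f nginx-test.yaml # once it is running, enter the container and run df -h to check the mount

Mount CephFS directly:

sudo mount -t ceph 10.68.68.248:6789,10.68.11.46:6789,10.68.167.91:6789:/ /mnt/jwh -o name=admin,secret=AQBI2aRg0XyWDxAANJKzQ/GUmH6VZBcxNx90JQ==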

I. Installation package download page: https://github.com/goharbor/harbor/releases

II. Download and install Harbor

cd /data/software/BUSINESS/HARBOR

wget https://github.com/goharbor/harbor/releases/download/v1.9.4/harbor-offline-installer-v1.9.4.tgz

tar xzvf harbor-offline-installer-v1.9.4.tgz

vim harbor/harbor.yml # configure Harbor

    hostname: 172.24.161.36
    harbor_admin_password: 6LLGZHBjx74i
    database:
      # The password for the root user of Harbor DB. Change this before any production use.
      password: 6LLGZHBjx74i
      # The maximum number of connections in the idle connection pool. If it <=0, no idle connections are retained.
      max_idle_conns: 50
      # The maximum number of open connections to the database. If it <= 0, then there is no limit on the number of open connections.
      # Note: the default number of connections is 100 for postgres.
      max_open_conns: 100
    # The default data volume
    data_volume: /data/harbor-data

docker network create -d bridge --subnet 10.20.30.0/24 harbor_harbor # create the Harbor network

docker network ls # list the networks

cd harbor

./install.sh # install Harbor with the script

docker-compose start # start Harbor

docker-compose ps # check the status of the Harbor services

III. Open a browser and go to http://ip:port to access Harbor